rubys/feedvalidator/master/.htaccess

Options +ExecCGI -MultiViews

AddHandler cgi-script .cgi

AddType image/x-icon ico

RewriteRule ^check$ check.cgi

DirectoryIndex index.html check.cgi

# MAP - bad bots are killing the server (everybody links to their validation results and the bots dutifully crawl them)

RewriteEngine on

RewriteCond %{HTTP_USER_AGENT} BecomeBot [OR]

RewriteCond %{HTTP_USER_AGENT} Crawler [OR]

RewriteCond %{HTTP_USER_AGENT} FatBot [OR]

RewriteCond %{HTTP_USER_AGENT} Feed24 [OR]

RewriteCond %{HTTP_USER_AGENT} Gigabot [OR]

RewriteCond %{HTTP_USER_AGENT} Googlebot [OR]

RewriteCond %{HTTP_USER_AGENT} htdig [OR]

RewriteCond %{HTTP_USER_AGENT} HttpClient [OR]

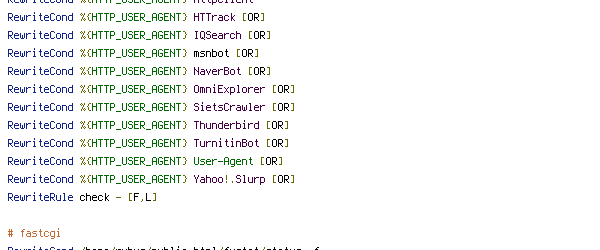

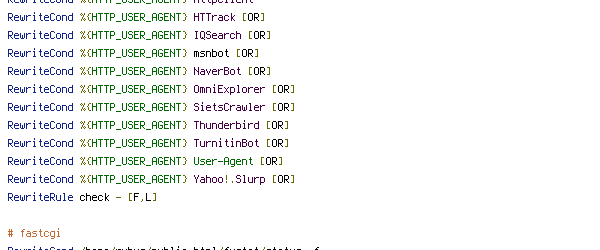

RewriteCond %{HTTP_USER_AGENT} HTTrack [OR]

RewriteCond %{HTTP_USER_AGENT} IQSearch [OR]

RewriteCond %{HTTP_USER_AGENT} msnbot [OR]

RewriteCond %{HTTP_USER_AGENT} NaverBot [OR]

RewriteCond %{HTTP_USER_AGENT} OmniExplorer [OR]

RewriteCond %{HTTP_USER_AGENT} SietsCrawler [OR]

RewriteCond %{HTTP_USER_AGENT} Thunderbird [OR]

RewriteCond %{HTTP_USER_AGENT} TurnitinBot [OR]

RewriteCond %{HTTP_USER_AGENT} User-Agent [OR]

RewriteCond %{HTTP_USER_AGENT} Yahoo!.Slurp

RewriteRule check - [F,L]

# fastcgi: while the status file exists, proxy check.cgi requests to the local backend on port 8080

RewriteCond /home/rubys/public_html/fvstat/status -f

RewriteRule check.cgi(.*)$ http://localhost:8080/rubys/feedvalidator.org/$1 [P]

<Files check.cgi>

Deny from feeds01.archive.org

Deny from www.feedvalidator.org

Deny from new.getfilesfast.com

Deny from gnat.yodlee.com

Deny from master.macworld.com

Deny from 62.244.248.104

Deny from 207.97.204.219

Deny from ik63025.ikexpress.com

Deny from 65-86-180-70.client.dsl.net

Deny from vanadium.sabren.com

</Files>

<Files config.py>

ForceType text/plain

</Files>